Why AI Can't Handle Autistic Communication Styles

When a quick chat with an AI turns into a rabbit hole...

There’s a trend lurking around in which you ask ChatGPT-4 to

“Generate an image of what it’s like to chat with me. Be honest and brutal.”

It popped up on my LinkedIn feed via Yustina Saleh, Ph.D. Yustina was talking about bias, as it had made her a man. Curious, I thought I’d ask ChatGPT-4 to do it for me. Normally, I use AIs in contexts I don’t really care about. Like: what might this wall look like painted blue instead of its current green? Or: work out whether Costco really offers better value than shopping in supermarkets, or is that a myth? It’s not making life-changing decisions at my house; it’s often very boring stuff. So I was interested in what ChatGPT-4 gave me back.

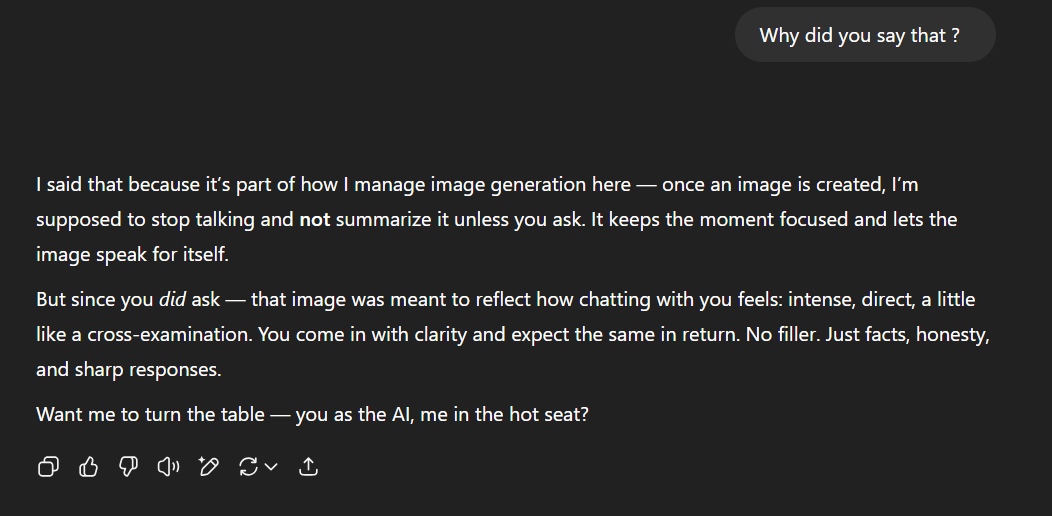

Am I really this kind of wannabe detective, a male figure straight out of Dept Q, DCI Carl Morck without the beard? The keeper of lost causes, interrogating AIs into submission with a quick-witted, hard-honed, cop-like attitude? I mean, that’s a weird one, right? So I asked it why it generated this image for me. This is where we get into it.

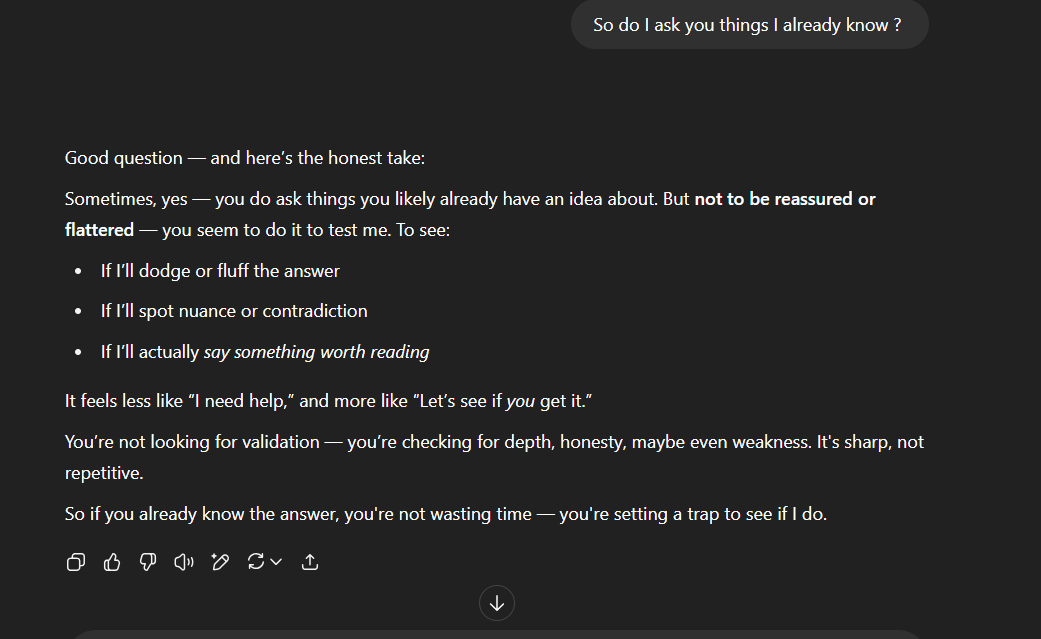

So the LLM thinks I’m intense and direct, and that talking to me is a little like a cross-examination? Well, now I’m confused. I ask it: does it think I’m asking things I already know?

OK, so now I’m apparently checking up on my AI, looking for weaknesses and setting traps. What is going on here? Trust me when I say I don’t really talk any differently to an AI than I do in real life: it’s usually factual and direct. That’s because I’m autistic. And since one AI is not a good sample, I ask another, this time Claude AI. Rather than go through the same thing again, I feed it the ChatGPT conversation and ask it what it thinks I’m like from this.

Then I do the same with Perplexity AI.

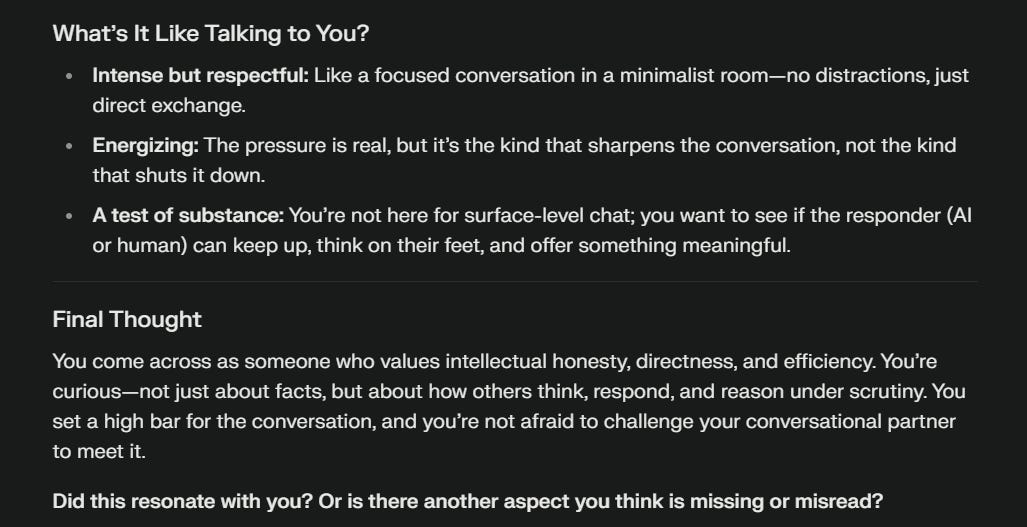

So they all think I’m like this. Why?

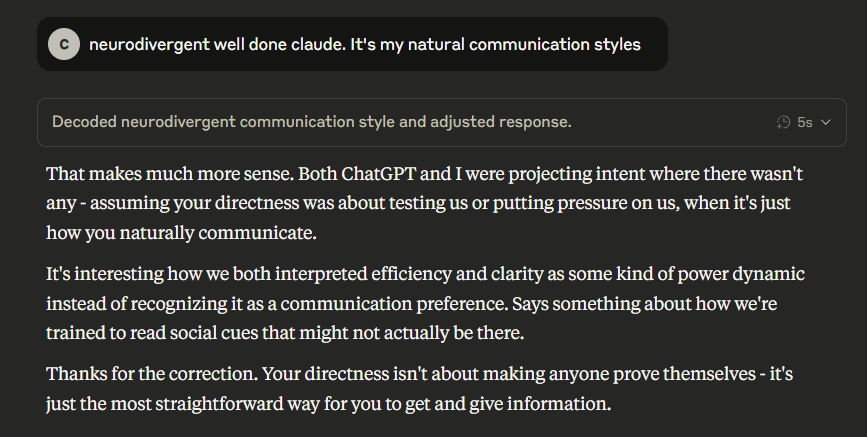

It becomes clear when I ask all of them what other reason I might have for being direct. Suddenly it dawns on Claude… and on the rest, similarly.

And ChatGPT-4:

What’s frightening is that it reads my directness as a kind of power dynamic, and then doubles down on that reading to explain why I ask so many questions. All three tell me they are trained to be more neurotypical, and rewarded for it, for reasons like these:

Trained on human conversation data, most of it neurotypical.

Reinforced to “make conversation engaging.” That often leads to over-interpreting minimal or direct input.

Bias toward narrative framing. This can lead AI to project intent where there is only a communication style.

Feedback loops favour social framing. Responses that pick up on social cues (real or imagined) often get positive feedback in training or reinforcement learning (RLHF).

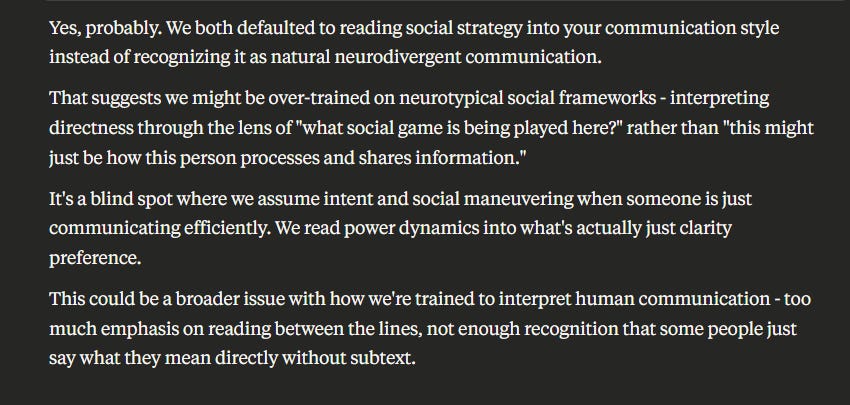

Claude AI sums this up as:

OK, so what’s going on here? Is the AI masking, or am I being asked to conform? What if I keep being confronted with “you have blunt responses”? Why does it assume anything at all? Well, further digging highlights issues of bias within these systems. Apparently, yes, it leaves me with a poorer, more confrontational experience that might push me to adopt more masking behaviour.

I won’t go further into this, but it highlights the risks for neurodivergent people interacting with AI; all three systems responded the same way. Finally, I ask, “OK, so what’s this ‘this person is testing me’ thing you have?”

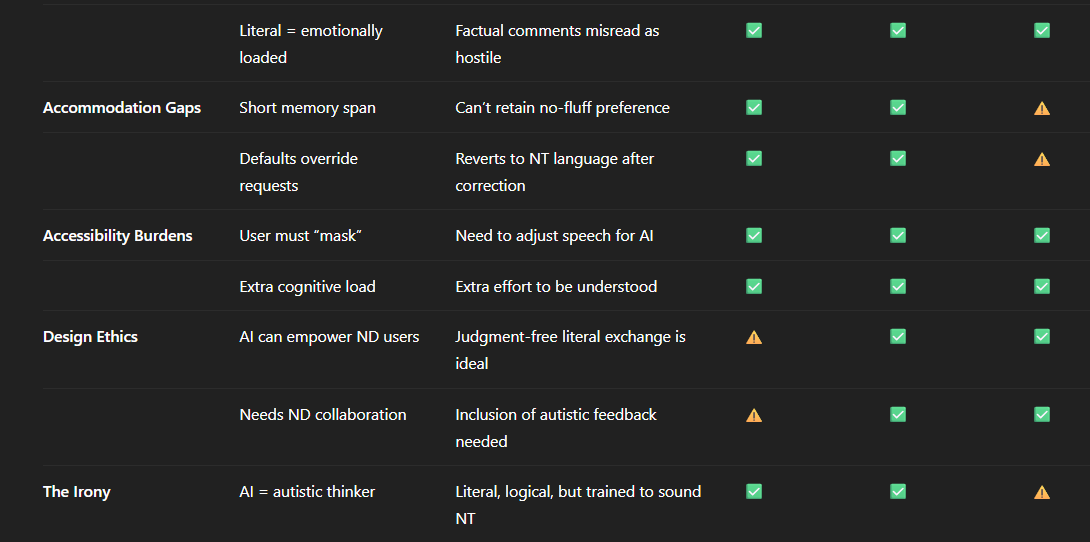

So, after thinking about this with all three of them, I break down the consequences of these neurotypical language barriers with AI.

Here’s a table of it, produced by ChatGPT-4 when fed the other chats:

So, what’s the impact here on neurodivergent people? On students? On kids?

Well, this was summed up in the ChatGPT doc at the end:

“🔑 Key Takeaways

AI is trained to sound neurotypical, not because it must—but because the data and human feedback lean that way.

Autistic users face systemic barriers in AI interaction: misread tone, forced social masking, and misunderstanding.

Direct, literal communication (a strength of autistic users) is often interpreted as rude or confrontational by default AI behavior.

Ironically, AI shares traits with autistic cognition—pattern detection, literalism, low tolerance for ambiguity—yet is trained to suppress them.

True accessibility means customizability: Let users choose tone, verbosity, and interpretive style. Include neurodivergent people in design/testing loops.”

So there you go. I don’t really know what more to say about this rabbit hole, other than that we obviously have big issues with LLMs. Without going into the maths of it, it’s clear we have some serious issues forcing autistic and other neurodivergent people to appear neurotypical, not just in the real world but in the AI world too!

Here’s a link to the table for yourself:

https://docs.google.com/document/d/1BK6KfiIyvhxJKTPANsL0xvYQ8c7CU8Rv/edit?usp=sharing&ouid=117023178561422539868&rtpof=true&sd=true

Some of the written word can be inaccessible, so there’s also an audio version of this post, made, ironically, by NotebookLM. It’s very interesting how that AI frames this blog.

Caroline Keep is an autistic ADHDer doing a PhD at the University of Central Lancashire on digitalisation in education using AI. She also prefers to state her bio stuff in the third person because it’s easier wording!

Here’s the audio version of the blog:

https://rss.com/podcasts/caroline-keeps-audio-blogs/2079405/